What are tokenizers, analyzers and filters in Elasticsearch?

Elasticsearch is one of the most popular search engines, and it helps you set up search functionality in very little time.

The building blocks of any search engine are tokenizers, token filters and analyzers. They determine how data is processed and stored by the search engine so that it can be looked up easily. Let’s look at how tokenizers, analyzers and token filters work and how they can be combined to build a powerful search engine using Elasticsearch.

Tokenizers

Tokenization is the process of breaking a string into smaller strings, called tokens (or terms), based on a certain rule.

Example:

Whitespace tokenizer:

This tokenizer takes a string and breaks it up wherever it encounters whitespace.
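To make the idea concrete, here is a minimal Python sketch of what whitespace tokenization does. It only imitates the behaviour for illustration; the function name `whitespace_tokenize` is made up for this post and is not part of Elasticsearch.

```python
def whitespace_tokenize(text: str) -> list[str]:
    """Imitate a whitespace tokenizer: split on runs of whitespace only."""
    # str.split() with no arguments splits on any whitespace and drops
    # empty strings, so punctuation and digits stay inside the tokens.
    return text.split()


print(whitespace_tokenize("The QUICK brown-fox, 2 dogs"))
# ['The', 'QUICK', 'brown-fox,', '2', 'dogs']
```

Note that nothing is lowercased or stripped: the comma stays attached to `brown-fox,` because only whitespace acts as a separator.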

There are numerous tokenizers available. Each of them breaks large text into individual word-sized chunks (known as tokens) that are stored for searching.

Other examples of tokenizers:

Letter tokenizer:

A tokenizer that breaks the string whenever it encounters anything but a letter.

Ex:

Input => “quick 2 brown’s fox”

Output => [quick, brown, s, fox]

It keeps only letters and removes any special characters or numbers.
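The same behaviour can be sketched in a few lines of Python. This is an illustrative approximation using a regular expression, not the actual Elasticsearch implementation, and `letter_tokenize` is a hypothetical name.

```python
import re


def letter_tokenize(text: str) -> list[str]:
    """Imitate a letter tokenizer: keep only maximal runs of letters."""
    # [^\W\d_] means "word characters minus digits and underscore",
    # which leaves letters only. Anything else acts as a separator.
    return re.findall(r"[^\W\d_]+", text)


print(letter_tokenize("quick 2 brown's fox "))
# ['quick', 'brown', 's', 'fox']
```

The digit `2` disappears entirely, and the apostrophe splits `brown's` into `brown` and `s`, matching the example above.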

Token Filters:

Token filters operate on the tokens produced by a tokenizer and modify them accordingly.

Examples of token filters:

Lowercase filter: The lowercase filter takes in any token and converts it to lowercase.

Ex:

Input => “QuicK”

Output => “quick”

Stemmer filter: Stems words based on certain rules. The stemmer filter can be configured with one of the many stemming algorithms available. You can take a look at the different stemmers available here.

Ex 1:

Input => “running”

Output => “run”

Ex 2:

Input => “shoes”

Output => “shoe”
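Conceptually, a token filter is just a function from a list of tokens to a list of tokens. The Python sketch below illustrates that with a lowercase filter and a deliberately tiny, made-up stemming rule set. The toy rules are an assumption for this example only; real stemmers such as the ones Elasticsearch offers use far more elaborate algorithms.

```python
def lowercase_filter(tokens: list[str]) -> list[str]:
    """Convert every token to lowercase."""
    return [token.lower() for token in tokens]


def toy_stem(token: str) -> str:
    """Toy stemmer with two hand-written rules (NOT a real algorithm)."""
    if token.endswith("ing") and len(token) > 5:
        token = token[:-3]
        # Undo a doubled final consonant: "runn" -> "run".
        if len(token) >= 2 and token[-1] == token[-2]:
            token = token[:-1]
    elif token.endswith("s") and not token.endswith("ss") and len(token) > 3:
        token = token[:-1]
    return token


def stemmer_filter(tokens: list[str]) -> list[str]:
    """Apply the toy stemmer to every token."""
    return [toy_stem(token) for token in tokens]


print(stemmer_filter(lowercase_filter(["QuicK", "Running", "Shoes"])))
# ['quick', 'run', 'shoe']
```

Because each filter takes and returns a token list, filters can be chained in any order, which is what an analyzer does.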

Analyzer:

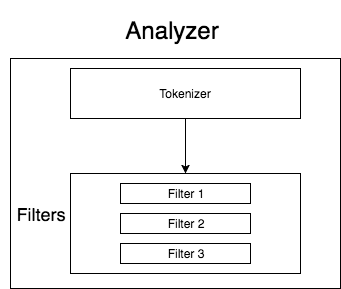

An analyzer is a combination of a tokenizer and filters that can be applied to any field for analysis in Elasticsearch. Elasticsearch ships with several built-in analyzers.

Some of the built-in analyzers in Elasticsearch:

1. Standard Analyzer: The standard analyzer is the default and most commonly used analyzer. It divides text on word boundaries defined by the Unicode Text Segmentation algorithm, removes most punctuation and lowercases terms. It can also remove stop words, but that is disabled by default, and it does not stem words.

Ex:

Input => “The 2 QUICK Brown-Foxes jumped over the lazy dog’s bone.”

Output => [the, 2, quick, brown, foxes, jumped, over, the, lazy, dog’s, bone]

NOTE: With the default settings, punctuation is removed but numbers and stop words such as “the” are kept. Setting the analyzer’s stopwords parameter to _english_ removes “the” from the output.

2. Whitespace Analyzer: The whitespace analyzer divides text on whitespace characters. Internally it uses the whitespace tokenizer to split the data.

Ex:

Input => “quick brown fox”

Output => [quick, brown, fox]

Custom Analyzer

As mentioned earlier, an analyzer is a combination of a tokenizer and filters. You can define your own analyzer from the available tokenizers and filters, based on your needs.

Let’s look at how to define a custom analyzer in Elasticsearch.

    {
      "analyzer": {
        "my_custom_analyzer": {
          "type": "custom",
          "tokenizer": "standard",
          "filter": [
            "uppercase"
          ]
        }
      }
    }

Here "type" marks the analyzer as custom, "tokenizer" sets the tokenizer and "filter" lists the token filters. The above analyzer is a custom analyzer with the following settings:

name — my_custom_analyzer

tokenizer — standard

filter — uppercase

Working of the above analyzer:

Input => “Quick Brown Fox”

Output => [QUICK, BROWN, FOX]
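The way an analyzer chains its parts can be sketched in Python as a tokenizer followed by a list of token filters. This is a simplified illustration: `simple_word_tokenize` is a rough regex stand-in for the standard tokenizer (which actually follows Unicode Text Segmentation), and all function names here are hypothetical.

```python
import re
from typing import Callable

Tokenizer = Callable[[str], list[str]]
TokenFilter = Callable[[list[str]], list[str]]


def simple_word_tokenize(text: str) -> list[str]:
    """Rough stand-in for the standard tokenizer: runs of word characters."""
    return re.findall(r"\w+", text)


def uppercase_filter(tokens: list[str]) -> list[str]:
    """Convert every token to uppercase."""
    return [token.upper() for token in tokens]


def analyze(text: str, tokenizer: Tokenizer, token_filters: list[TokenFilter]) -> list[str]:
    """Run the tokenizer first, then each token filter in the listed order."""
    tokens = tokenizer(text)
    for token_filter in token_filters:
        tokens = token_filter(tokens)
    return tokens


print(analyze("Quick Brown Fox", simple_word_tokenize, [uppercase_filter]))
# ['QUICK', 'BROWN', 'FOX']
```

The order of the filter list matters, since every filter sees the output of the one before it.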

Take a look at the below diagram to understand how the data is processed by any analyzer.

A few other examples of custom analyzers:

1) Analyzer with stopwords and synonyms

    {
      "settings": {
        "analysis": {
          "analyzer": {
            "my_custom_analyzer": {
              "type": "custom",
              "tokenizer": "standard",
              "filter": [
                "lowercase",
                "english_stop",
                "synonym"
              ]
            }
          },
          "filter": {
            "english_stop": {
              "type": "stop",
              "stopwords": "_english_"
            },
            "synonym": {
              "type": "synonym",
              "synonyms": [
                "i-pod, ipod",
                "universe, cosmos"
              ]
            }
          }
        }
      }
    }

Working:

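Input => I live in this Universe

Output => [i, live, universe, cosmos]

The words [in, this] are removed as stop words because they are of little use for searching. “I” is lowercased but kept, because it is not part of the _english_ stop word list, and the synonym filter adds “cosmos” at the same position as “universe”.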
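The stop and synonym steps can be imitated in Python as two more token filters. This is a sketch under stated assumptions: `STOP_WORDS` is a small hand-picked set rather than the full _english_ list, the input sentence is a separate made-up example, and the flat output list ignores token positions (a real synonym filter emits synonyms at the same position as the original token).

```python
STOP_WORDS = {"a", "an", "and", "in", "is", "of", "the", "this", "to"}  # assumed subset


def build_synonym_map(rules: list[str]) -> dict[str, list[str]]:
    """Turn rules like "universe, cosmos" into word -> [all equivalents]."""
    mapping: dict[str, list[str]] = {}
    for rule in rules:
        group = [word.strip() for word in rule.split(",")]
        for word in group:
            mapping[word] = group
    return mapping


def stop_filter(tokens: list[str]) -> list[str]:
    """Drop every token that is a stop word."""
    return [token for token in tokens if token not in STOP_WORDS]


def synonym_filter(tokens: list[str], mapping: dict[str, list[str]]) -> list[str]:
    """Expand each token into its whole synonym group, if it has one."""
    expanded: list[str] = []
    for token in tokens:
        expanded.extend(mapping.get(token, [token]))
    return expanded


synonyms = build_synonym_map(["universe, cosmos"])
tokens = "The universe is vast".lower().split()
print(synonym_filter(stop_filter(tokens), synonyms))
# ['universe', 'cosmos', 'vast']
```

Expanding synonyms at index time like this means a search for either word can match the document.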

2) Analyzer with stemmer and stop-words

    {
      "settings": {
        "analysis": {
          "analyzer": {
            "my_custom_analyzer": {
              "type": "custom",
              "tokenizer": "standard",
              "filter": [
                "lowercase",
                "english_stop",
                "english_stemmer"
              ]
            }
          },
          "filter": {
            "english_stemmer": {
              "type": "stemmer",
              "language": "english"
            },
            "english_stop": {
              "type": "stop",
              "stopwords": "_english_"
            }
          }
        }
      }
    }

Working:

Input => “Learning is fun”

Output => [learn, fun]

The word “is” is removed as a stop word and “learning” is stemmed to “learn”.

3) Analyzer with word mappings to replace special characters.

    {
      "settings": {
        "analysis": {
          "analyzer": {
            "my_custom_analyzer": {
              "type": "custom",
              "char_filter": [
                "replace_special_characters"
              ],
              "tokenizer": "standard",
              "filter": [
                "lowercase"
              ]
            }
          },
          "char_filter": {
            "replace_special_characters": {
              "type": "mapping",
              "mappings": [
                ":) => happy",
                ":( => sad",
                "& => and"
              ]
            }
          }
        }
      }
    }

Working of the above analyzer:

Input => Good weekend :)

Output => [good, weekend, happy]

Input => Pride & Prejudice

Output => [pride, and, prejudice]

NOTE:

Notice that we used a char_filter instead of a token filter here. A char_filter runs before the tokenizer, so it can replace special characters such as smileys and ampersands with the given mappings before the standard tokenizer discards them. Alternatively, we could use the whitespace tokenizer to preserve the special characters and handle them later in a token filter.
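The effect of that ordering can be seen in a small Python sketch that runs the same text with and without a mapping step before tokenization. As before, `simple_word_tokenize` is only a rough regex stand-in for the standard tokenizer, and the function names are hypothetical.

```python
import re

MAPPINGS = {":)": "happy", ":(": "sad", "&": "and"}


def mapping_char_filter(text: str, mappings: dict[str, str]) -> str:
    """Replace each mapped character sequence in the raw text."""
    # Longer keys go first so overlapping keys behave predictably.
    for key in sorted(mappings, key=len, reverse=True):
        text = text.replace(key, mappings[key])
    return text


def simple_word_tokenize(text: str) -> list[str]:
    """Rough stand-in for the standard tokenizer: runs of word characters."""
    return re.findall(r"\w+", text)


def analyze(text: str, use_char_filter: bool) -> list[str]:
    """Char filter (optional) -> tokenizer -> lowercase token filter."""
    if use_char_filter:
        text = mapping_char_filter(text, MAPPINGS)
    return [token.lower() for token in simple_word_tokenize(text)]


print(analyze("Good weekend :)", use_char_filter=False))
# ['good', 'weekend']
print(analyze("Good weekend :)", use_char_filter=True))
# ['good', 'weekend', 'happy']
print(analyze("Pride & Prejudice", use_char_filter=True))
# ['pride', 'and', 'prejudice']
```

Without the char filter the smiley never reaches the token filters at all, which is why the replacement has to happen on the raw text.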

Thus you can configure custom analyzers according to your needs and use them for search to obtain better results. There are plenty of ways to configure analyzers, so choose them carefully based on the data you index.

To learn more about building basic search functionality, take a look at my post.